This post introduces our new Blackbox Mini dataset of novice programmer behaviour. First, let’s whizz through a quick bit of Blackbox background, because Blackbox Mini doesn’t make sense if you don’t know what Blackbox is. We develop BlueJ, a beginner’s IDE for Java. All users of BlueJ are asked to opt in to recording their activity data for research purposes. This data is automatically uploaded to the Blackbox dataset, which collects things like debugger use, testing activity, compile errors, source code edits and more. Blackbox is stored in an SQL database with a documented schema. The Blackbox dataset has been collecting since 2013, and is now quite large (the central table has 4 billion rows). Blackbox access is available to other researchers on request. Phew! So that’s Blackbox.

Introducing Blackbox Mini

Two years ago we ran a survey of researchers who were using Blackbox. One item of feedback was that the database was unwieldy for the common use case. Many researchers wanted to look at source code and compile errors, but were then faced with an SQL database which was so large that even simple queries could take a long time and produce a flood of data. It felt like there was room for improvement in the way the data was presented to researchers. This is the motivation behind a new dataset: Blackbox Mini.

Blackbox Mini is a small subset of the original Blackbox data, with an additional simplified way to access the source code. The intention is that this will make it easier for new researchers to get started. I’ll dig into each of those features in turn.

A small subset

The subsetting is fairly straightforward: for simplicity, I took the initial part of the Blackbox dataset, with the first million events from 2013 (rather than the full 4 billion since). This does mean it lacks more recent data such as BlueJ 4’s change to compilation behaviour, but it was technically by far the easiest way to extract a subset.

The subset is stored in a database with an identical schema to the full dataset. The advantage of a smaller database is that queries should execute much more quickly, and the results are less likely to be overwhelming. Because the schemas are identical, queries developed and tested on the smaller database can be run as-is on the larger database afterwards.

An additional simplified way to access the source code

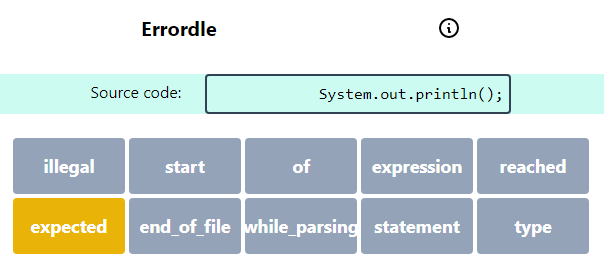

The more interesting part is the new data format, which solves a lot of previous problems. A lot of researchers are attracted to Blackbox to try to analyse the source code and compile errors, which are a bit buried away in our database. And even when you get the source code out of Blackbox, you have the general problem that you need a parser to perform any analyses. So our problems are:

1. The source code is not so easy to access, and common tasks such as “find me all the sequential versions of this source file and the compile errors at each stage” are not obvious.

2. You need to find a Java parser compatible with your analysis language of choice (be it Python, Java itself, Scheme, etc.).

3. Not all the parsers are up to date with Java 8 (which added lambda syntax).

4. Many parsers are only designed for syntactically valid code, but much of the Blackbox data is syntactically invalid. It’s hard to analyse the causes of compiler errors if you can’t handle any invalid code.

5. Even once you have a syntax tree, doing analysis on tree structures can be laborious in many languages.

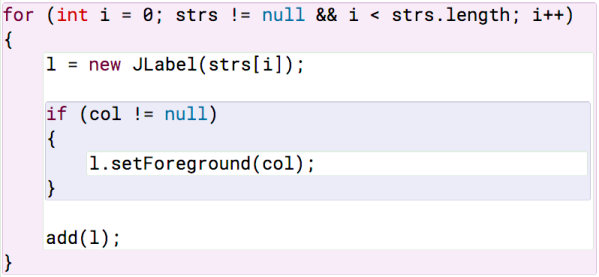

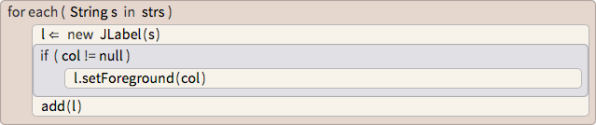

Two years ago I was at a Dagstuhl seminar and met a researcher who was working on a project called SrcML. SrcML takes in Java source code and produces an XML document that represents the parse tree. For example, this fragment (abbreviated for space reasons):

```java
public static void main(String [ ] args)
{
    int test1 = 96; //test score 1
```

Turns into this piece of XML:

```xml
<function><type><specifier>public</specifier> <specifier>static</specifier> <name>void</name></type> <name>main</name><parameter_list>(<parameter><decl><type><name><name>String</name><index>[ ]</index></name></type> <name>args</name></decl></parameter>)</parameter_list>
<block>{<block_content>
<decl_stmt><decl><type><name>int</name></type> <name>test1</name> <init>= <expr><literal type="number">96</literal></expr></init></decl>;</decl_stmt> <comment type="line">//test score 1</comment>
```
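You can check how little SrcML changes with a few lines of Python. SrcML is designed to keep all of the original text, including whitespace and comments, and only wrap it in tags, so concatenating the text content of the XML should give back the source. This minimal sketch uses the standard library’s ElementTree on the declaration line from the fragment above; the wrapping `<unit>` root element is added here only so that the snippet parses on its own.

```python
import xml.etree.ElementTree as ET

# The declaration line from the SrcML output above, wrapped in a <unit>
# root element (an assumption for this sketch) so it is well-formed XML.
FRAGMENT = (
    '<unit><decl_stmt><decl><type><name>int</name></type> <name>test1</name> '
    '<init>= <expr><literal type="number">96</literal></expr></init></decl>;'
    '</decl_stmt> <comment type="line">//test score 1</comment></unit>'
)


def source_text(srcml):
    """Drop all markup and return the concatenated text content."""
    return "".join(ET.fromstring(srcml).itertext())


print(source_text(FRAGMENT))  # int test1 = 96; //test score 1
```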

This may not seem like it’s doing much besides changing to an isomorphic format, but it actually helps mitigate all of our problems:

1. The format can be a directory full of XML files, no database needed.

2. XML libraries are generally easier to find for your programming language of choice than a Java parsing library.

3. Only SrcML has to be up-to-date with Java 8 (although admittedly it doesn’t support Java 10’s var keyword).

4. SrcML is somewhat robust to syntactically invalid code.

5. XML already has a query language, XPath, designed to allow easy querying of tree structures, which we can use for analysis.
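As a small illustration of point 5, here is a sketch that runs two queries over the SrcML fragment shown earlier, using the XPath subset supported by Python’s built-in ElementTree: one lists every declaration’s type and variable name, the other uses an attribute predicate to pick out numeric literals. The fragment is closed off and wrapped in a `<unit>` root here (an assumption, so that it parses), and the function names are illustrative only.

```python
import xml.etree.ElementTree as ET

# The SrcML fragment from earlier, closed off and wrapped in <unit> so it parses.
SRCML = """<unit>
<function><type><specifier>public</specifier> <specifier>static</specifier> <name>void</name></type> <name>main</name><parameter_list>(<parameter><decl><type><name><name>String</name><index>[ ]</index></name></type> <name>args</name></decl></parameter>)</parameter_list>
<block>{<block_content>
<decl_stmt><decl><type><name>int</name></type> <name>test1</name> <init>= <expr><literal type="number">96</literal></expr></init></decl>;</decl_stmt> <comment type="line">//test score 1</comment>
</block_content>}</block></function>
</unit>"""


def declarations(root):
    """Return (type text, variable name) for every <decl>, in document order."""
    result = []
    for decl in root.iterfind(".//decl"):  # any <decl> at any depth
        type_el = decl.find("type")
        name_el = decl.find("name")  # the direct child <name> is the variable
        type_text = "".join(type_el.itertext()) if type_el is not None else ""
        result.append((type_text, name_el.text if name_el is not None else None))
    return result


def number_literals(root):
    """Return the text of every <literal type="number"> element."""
    return [lit.text for lit in root.iterfind(".//literal[@type='number']")]


root = ET.fromstring(SRCML)
print(declarations(root))     # [('String[ ]', 'args'), ('int', 'test1')]
print(number_literals(root))  # ['96']
```

Note that the array type is marked up as a `<name>` containing another `<name>` plus an `<index>`, so the outer `<name>` has no text of its own. A query that reads `.text` from `.//decl/type/name` and skips `None` values (as the counting script later in this post does) therefore skips the `String[ ]` declaration in this fragment.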

So Blackbox Mini provides source code in a simple file-based format. There is a sub-directory for each Blackbox project, and within it an XML file for each source file in that project. Each XML file contains the sequential history of that source file, with one version for each compilation attempt; XML tags and attributes record the compile errors and the (SrcML-processed) source code for each version.
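With this layout, the “sequential versions plus compile errors” task from problem 1 becomes a simple loop over one file. The sketch below is hedged heavily: the post does not spell out the exact tag and attribute names, so `<history>`, `<version>`, `<error>`, `line` and `message` are hypothetical stand-ins, and only `<unit>` (SrcML’s root element) is real. Adjust the names to match the actual Blackbox Mini files.

```python
import xml.etree.ElementTree as ET

# HYPOTHETICAL file layout: <history>, <version>, <error> and the "line" and
# "message" attributes are invented for illustration, not Blackbox Mini's names.
SAMPLE = """<history>
  <version>
    <error line="3" message="';' expected"/>
    <unit><expr_stmt>x = 1</expr_stmt></unit>
  </version>
  <version>
    <unit><expr_stmt>x = 1;</expr_stmt></unit>
  </version>
</history>"""


def error_timeline(root):
    """Yield (version index, [(line, message), ...]) in compilation order."""
    for index, version in enumerate(root.iterfind("version")):
        errors = [(int(e.get("line")), e.get("message"))
                  for e in version.iterfind("error")]
        yield index, errors


for index, errors in error_timeline(ET.fromstring(SAMPLE)):
    status = "compiled OK" if not errors else "%d error(s)" % len(errors)
    print(index, status, errors)
```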

This means that everything you need to know for the most common use case (looking at source code and compile errors) is in the XML files. At a workshop at the abbreviated SIGCSE 2020 I worked through some examples of performing analysis from Python. Here is a complete Python 2.7 script for counting the frequencies of types used in declarations in all of the Blackbox Mini source code:

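```python
import xml.etree.ElementTree as ET
import os
from collections import Counter

# Tally of type names used in declarations, across every version of every file
acc = Counter()

for (dirpath, dirnames, filenames) in os.walk('/mini/srcml'):
    for filename in filenames:
        # Each XML file holds the version history of one source file
        root = ET.parse(os.path.join(dirpath, filename)).getroot()
        # A <name> directly inside <decl><type> is the declared type
        for name_el in root.findall('.//decl/type/name'):
            # Compound names (e.g. array types) nest further <name> elements,
            # so the outer one has no direct text and is skipped here
            if name_el.text is not None:
                acc.update([name_el.text])

print(acc)
```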

That takes about twenty minutes to run on the whole of Blackbox Mini (I suggest using an even smaller subset during development). The top ten types are: ‘int’: 526817, ‘String’: 172710, ‘double’: 111872, ‘Color’: 42114, ‘boolean’: 34433, ‘ActionEvent’: 15868, ‘Scanner’: 15614, ‘JButton’: 15272, ‘Graphics’: 13729, ‘JPanel’: 13085. There are further questions to answer before interpreting this result: the types are counted at every compile, and I believe a few long, frequently compiled programs are biasing the data somewhat. Should you normalise by source file, by project, by user, or a combination? What I like is that Blackbox Mini lets me move on to the interesting research considerations much more quickly, rather than getting stuck on the technical aspects of getting the data.
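As one possible answer to the normalisation question (not the method behind the figures above), here is a sketch that generalises the counting script. With `per_file=True` each type is counted at most once per XML file, so a few heavily recompiled files cannot dominate; `max_files` stops early for quick development runs. Both parameters are illustrative additions, and the sketch assumes, like the script, that every file under the directory is a Blackbox Mini XML file.

```python
import os
import xml.etree.ElementTree as ET
from collections import Counter


def count_types(root_dir, per_file=False, max_files=None):
    """Count declared type names in the XML files under root_dir.

    per_file=False counts every occurrence in every version (as the script
    above does); per_file=True counts each type at most once per file.
    max_files (illustrative) limits how many files are read.
    """
    acc = Counter()
    seen = 0
    for dirpath, _dirnames, filenames in os.walk(root_dir):
        for filename in sorted(filenames):
            if max_files is not None and seen >= max_files:
                return acc
            seen += 1
            root = ET.parse(os.path.join(dirpath, filename)).getroot()
            names = [el.text for el in root.iterfind(".//decl/type/name")
                     if el.text is not None]
            # A set collapses repeats within this file's version history
            acc.update(set(names) if per_file else names)
    return acc
```

Normalising by project or by user would follow the same pattern, collapsing names per project directory or per user before updating the counter.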

Summary

My hope is that this new presentation of a Blackbox subset makes it easier for more researchers to start analysing the Blackbox dataset. The Blackbox Mini dataset lives on the same machine as the Blackbox dataset, so if you already have access to Blackbox, you also already have access to Blackbox Mini (I’ll add some resources for this to our Blackroom community site). If you’re a researcher interested in getting access to the data, send an email to blackbox-admin@bluej.org and we will send you the simple form to fill in to sign up.